The sound of kissing

Human Interaction Categorization by Using Audio-Visual Cues

Manuel J. Marín-Jiménez, R. Muñoz-Salinas, E. Yeguas-Bolivar and N. Perez de la Blanca

Overview

Human Interaction Recognition (HIR) in uncontrolled TV video material is a very challenging problem because of the huge intra-class variability of the classes (due to large differences in the way actions are performed, lighting conditions and camera viewpoints, amongst others) as well as the small inter-class variability (e.g., the visual difference between hug and kiss is very subtle). Most previous works have focused only on visual information (i.e., the image signal), thus missing an important source of information present in human interactions: the audio. So far, such vision-only approaches have not proven discriminative enough. This work proposes the use of an Audio-Visual Bag of Words (AVBOW) as a more powerful mechanism to approach the HIR problem than the traditional Visual Bag of Words (VBOW). We show in this paper that the combined use of video and audio information yields better classification results than video alone. Our approach has been validated on the challenging TVHID dataset, showing that the proposed AVBOW provides statistically significant improvements over the VBOW employed in the related literature.
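The AVBOW idea can be illustrated with a minimal sketch, assuming each clip has already been turned into a set of local visual descriptors and a set of local audio descriptors, and that one codebook per modality has been learned beforehand (e.g. by k-means). Each descriptor is assigned to its nearest codeword, each modality yields an L1-normalised histogram, and the two histograms are concatenated. The function names, the toy codebooks and the choice of plain concatenation as the fusion step are illustrative assumptions, not the exact implementation of [1].

```python
from typing import List, Sequence

Vector = Sequence[float]


def nearest_codeword(descriptor: Vector, codebook: Sequence[Vector]) -> int:
    """Index of the codeword closest to `descriptor` (squared Euclidean distance)."""
    best_idx, best_dist = 0, float("inf")
    for idx, word in enumerate(codebook):
        dist = sum((d - w) ** 2 for d, w in zip(descriptor, word))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def bow_histogram(descriptors: Sequence[Vector], codebook: Sequence[Vector]) -> List[float]:
    """L1-normalised bag-of-words histogram; all zeros if there are no descriptors."""
    hist = [0.0] * len(codebook)
    for desc in descriptors:
        hist[nearest_codeword(desc, codebook)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


def avbow(visual_desc: Sequence[Vector], visual_codebook: Sequence[Vector],
          audio_desc: Sequence[Vector], audio_codebook: Sequence[Vector]) -> List[float]:
    """Hypothetical AVBOW: visual histogram followed by audio histogram (early fusion)."""
    return bow_histogram(visual_desc, visual_codebook) + bow_histogram(audio_desc, audio_codebook)


if __name__ == "__main__":
    # Toy codebooks and descriptors, made up purely for illustration.
    visual_codebook = [[0.0, 0.0], [1.0, 1.0]]
    audio_codebook = [[0.0], [5.0], [10.0]]
    clip_visual = [[0.1, 0.2], [0.9, 1.1], [1.0, 0.8], [0.0, 0.1]]
    clip_audio = [[4.0], [6.0], [9.5], [5.2]]
    print(avbow(clip_visual, visual_codebook, clip_audio, audio_codebook))
    # prints [0.5, 0.5, 0.0, 0.75, 0.25]
```

Dropping the audio half reduces `avbow` to a plain VBOW histogram, which is the vision-only baseline the abstract compares against.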

Can you guess which human interaction produces the following sound?

These sounds were extracted from the TVHID dataset [2].

Kiss or hug?

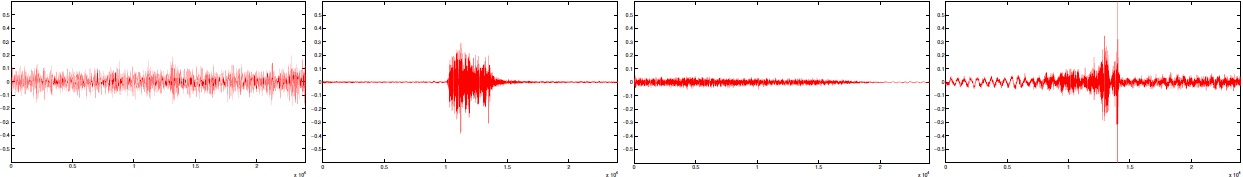

Sometimes visual information on its own is not enough to automatically distinguish between human interactions. In this figure, (a) and (c) correspond to hug, whereas (b) and (d) correspond to kiss (see below for a graphical representation of their associated audio signals).


Proposed pipeline

We cast HIR as an audio-visual problem [1].
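Once each clip is described by a fused audio-visual histogram, a standard classifier can assign it an interaction label. The sketch below uses a 1-nearest-neighbour rule with the chi-square distance, a common way to compare histograms, chosen here only for brevity; the classifier actually used in [1] may differ (e.g. an SVM). The function names, the training vectors and the `hug`/`kiss` labels are made-up examples.

```python
from typing import List, Sequence, Tuple


def chi2_distance(p: Sequence[float], q: Sequence[float], eps: float = 1e-10) -> float:
    """Chi-square distance between two non-negative histograms of equal length."""
    # `eps` avoids division by zero for bins that are empty in both histograms.
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(p, q))


def classify(query: Sequence[float], training: List[Tuple[Sequence[float], str]]) -> str:
    """Hypothetical 1-NN classifier: label of the closest training histogram.

    Raises ValueError if `training` is empty.
    """
    _, label = min(training, key=lambda item: chi2_distance(query, item[0]))
    return label


if __name__ == "__main__":
    # Made-up fused histograms (visual bins followed by audio bins).
    training = [
        ([0.5, 0.5, 0.0, 0.75, 0.25], "hug"),
        ([0.5, 0.5, 0.6, 0.2, 0.2], "kiss"),
    ]
    print(classify([0.5, 0.5, 0.1, 0.7, 0.2], training))  # prints hug
```

In this toy example the two classes share identical visual bins, so only the audio bins separate them, mirroring the kiss/hug ambiguity described above.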

Downloads

| Filename | Description | Size |
|---|---|---|
| sok.zip | Audio sequences of the kiss interaction | 521 KB |

Related Publications

[1] M. J. Marín-Jiménez, R. Muñoz-Salinas, E. Yeguas-Bolivar and N. Perez de la Blanca. Human Interaction Categorization by Using Audio-Visual Cues. Machine Vision and Applications, 2013 (accepted for publication).

Document: PDF

[2] A. Patron-Perez, M. Marszałek, A. Zisserman and I. D. Reid. High Five: Recognising Human Interactions in TV Shows. BMVC, 2010.

Document: PDF

Acknowledgements

This research was partially supported by the Spanish Ministry of Economy and Competitiveness under projects P10-TIC-6762, TIN2010-15137, TIN2012-32952 and BROCA, and the European Regional Development Fund (FEDER).